I upgraded my home internet connection to fibre (FTTP) last October. I'm still on an 80M/20M service, so it's no faster than my old VDSL FTTC connection was, and as a result for a long time I continued to use my HomeHub 5A running OpenWRT. However the FTTP ONT meant I was using up an additional ethernet port on the router, and I was already short, so I ended up with a GigE switch in use as well. Also my wifi is handled by a UniFi, which takes its power via Power-over-Ethernet. That meant I had a router, a switch and a PoE injector all in close proximity. I wanted to reduce the number of devices, and ideally upgrade to something that could scale once I decide to upgrade my FTTP service speed.

Looking around I found the

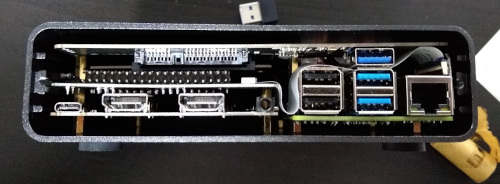

MikroTik RB3011UiAS-RM, which is a rack mountable device with 10 GigE ports (plus an SFP slot) and a dual core

Qualcomm IPQ8064 ARM powering it. There s 1G RAM and 128MB NAND flash, as well as a USB3 port. It also has PoE support. On paper it seemed like an ideal device. I wasn t particularly interested in running RouterOS on it (the provided software), but that s based on Linux and there was some work going on within OpenWRT to add support, so it seemed like a worthwhile platform to experiment with (what, you expected this to be about me buying an off the shelf device and using it with only the supplied software?). As an added bonus a friend said he had one he wasn t using, and was happy to sell it to me for a bargain price.

I did try out RouterOS to start with, but I didn't find it particularly compelling. I'm comfortable configuring firewalling and routing at a Linux command line, and I run some additional services on the router like my MQTT broker, and mqtt-arp, my wifi device presence monitor. I could move things around such that they ran on the house server, but I consider them core services and as a result am happier with them on the router.

The first step was to get something booting on the router. Luckily it has an RJ45 serial console port on the back, and a reasonably featured bootloader that can manage to boot via tftp over the network. It wants an ELF binary rather than a plain kernel, but Sergey Sergeev had done the hard work of getting u-boot working for the IPQ8064, which meant I could just build normal u-boot images to try out.

Linux upstream already had basic support for a lot of the pieces I was interested in. There's a slight fudge around AUTO_ZRELADDR because the network coprocessors want a chunk of memory at the start of RAM, but there are ongoing discussions about how to handle this cleanly that I'm hopeful will eventually mean I can drop that hack. Serial, ethernet, the QCA8337 switches (2 sets of 5 ports, tied to different GigE devices on the processor) and the internal NOR all had drivers, so it was a matter of crafting an appropriate DTB to get them working. That left a few niggles.

First, the second switch is hooked up via SGMII. It turned out the IPQ806x stmmac driver didn't initialise the clocks in this mode correctly, and neither did the qca8k switch driver. So I needed to fix up both of those (Sergey had handled the stmmac driver, so I just had to clean up and submit his patch). Next it turned out the driver for talking to the Qualcomm firmware (SCM) had been updated in a way that broke the old method needed on the IPQ8064. Some git archaeology figured that one out and provided a solution. Ansuel Smith helpfully provided the DWC3 PHY driver for the USB port. That got me to the point I could put a Debian armhf image onto a USB stick and mount that as root, which made debugging much easier.

At this point I started to play with configuring up the device to actually act as a router. I make use of a number of VLANs on my home network, so I wanted to make sure I could support those. It turned out the stmmac driver wasn't happy reconfiguring its MTU because the IPQ8064 driver doesn't configure the FIFO sizes. I found what seem to be the correct values and plumbed them in. Then the qca8k driver only supported port bridging. I wanted the ability to have a trunk port to connect to the upstairs switch, while also having ports that only had a single VLAN for local devices. And I wanted the switch to handle this rather than requiring the CPU to bridge the traffic. Thankfully it's easy to find a copy of the QCA8337 datasheet and the kernel Distributed Switch Architecture is pretty flexible, so I was able to implement the necessary support.
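For anyone unfamiliar with the trunk versus single-VLAN port distinction, here is a minimal Python sketch of what 802.1Q tagging does to an Ethernet frame. It is purely illustrative and has nothing to do with how the qca8k driver or the switch hardware implements it; the function names `add_vlan_tag` and `strip_vlan_tag` are made up for this example. A trunk port carries frames with the 4 byte tag present, while a single-VLAN (access) port adds the tag on ingress and removes it on egress.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def add_vlan_tag(frame: bytes, vid: int, pcp: int = 0) -> bytes:
    """Insert an 802.1Q tag after the destination and source MACs."""
    if not 1 <= vid <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7:
        raise ValueError("priority must be in 0..7")
    tci = (pcp << 13) | vid  # DEI bit left at 0
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def strip_vlan_tag(frame: bytes):
    """Return (vid, untagged_frame); vid is None if the frame has no tag."""
    (tpid,) = struct.unpack("!H", frame[12:14])
    if tpid != TPID_8021Q:
        return None, frame
    (tci,) = struct.unpack("!H", frame[14:16])
    return tci & 0x0FFF, frame[:12] + frame[16:]


# Hypothetical broadcast IPv4 frame from a locally administered MAC.
untagged = bytes.fromhex("ffffffffffff" "020000000001" "0800") + b"payload"
on_trunk = add_vlan_tag(untagged, vid=10)
```

Doing this in the switch means the CPU only sees tagged frames that actually need routing between VLANs.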

I stuck with Debian on the USB stick for actually putting the device into production. It makes it easier to fix things up if necessary, and the USB stick allows for a full Debian install which would be tricky on the 128M of internal NAND. That means I can use things like nftables for my firewalling, and use the standard Debian packages for things like collectd and mosquitto. Plus for debug I can fire up things like tcpdump or tshark. Which ended up being useful because when I put the device into production I started having weird IPv6 issues that turned out to be a lack of proper Ethernet multicast filter support in the IPQ806x ethernet device. The driver would try and set up the multicast filter for the IPv6 NDP related packets, but it wouldn't actually work. The fix was to fall back to just receiving all multicast packets - this is what the vendor driver does.
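To show why a broken multicast filter hurts IPv6 in particular, here is a small Python sketch of the addresses involved. Neighbour discovery for a unicast address is sent to its solicited-node multicast group (`ff02::1:ff` followed by the low 24 bits of the address), and on Ethernet that group maps to a MAC of `33:33` followed by the low 32 bits of the group address. If the NIC filter never passes those MACs up, neighbours can't resolve the router. The helper names and the example address (from the 2001:db8::/32 documentation prefix) are my own, not anything from the driver.

```python
import ipaddress


def solicited_node_multicast(addr: str) -> ipaddress.IPv6Address:
    """Solicited-node multicast group for a unicast IPv6 address."""
    low24 = int(ipaddress.IPv6Address(addr)) & 0xFFFFFF
    base = int(ipaddress.IPv6Address("ff02::1:ff00:0"))
    return ipaddress.IPv6Address(base | low24)


def multicast_mac(group) -> str:
    """Ethernet destination MAC used for an IPv6 multicast group."""
    low32 = int(ipaddress.IPv6Address(group)) & 0xFFFFFFFF
    octets = [0x33, 0x33] + list(low32.to_bytes(4, "big"))
    return ":".join(f"{o:02x}" for o in octets)


group = solicited_node_multicast("2001:db8::1234:5678")
print(group, multicast_mac(group))
```

Every address on the interface needs its own entry like this in the filter, plus the all-nodes group, which is why accepting all multicast is the simple fallback.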

Most of this work will be present once the 5.9 kernel is released - the basics are already in 5.8. Currently not queued up that I can think of are the following:

- stmmac IPQ806x FIFO sizes. I sent out an RFC patch for these, but didn't get any replies. I probably just need to submit this.

- NAND. This is missing support for the QCOM ADM DMA engine. I've sent out the patch I found to enable this, and have had some feedback, so I'm hopeful it will get in at some point.

- LCD. AFAICT the LCD is an ST7735 device, which has kernel support, but I haven't spent serious effort getting the SPI configuration to work.

- Touchscreen. Again, this seems to be a zt2046q or similar, which has a kernel driver, but the basic attempts I've tried don't get any response.

- Proper SFP functionality. The IPQ806x has a PCS module, but the stmmac driver doesn't have an easy way to plumb this in. I have ideas about how to get it working properly (and it can be hacked up with a fixed link config) but it's not been a high priority.

- Device tree additions. Some of the later bits I've enabled aren't yet in the mainline RB3011 DTB. I'll submit a patch for that at some point.

Overall I consider the device a success, and it's been entertaining getting it working properly. I'm running a mostly mainline kernel, it's handling my house traffic without breaking a sweat, and the fact it's running Debian makes it nice and easy to throw more things on it as I desire. However it turned out the RB3011 isn't as perfect a device as I'd hoped. The PoE support is passive, and the UniFi wants 802.3af. So I was going to end up with 2 devices. As it happened I picked up a cheap D-Link DGS-1210-10P switch, which provides the PoE support as well as some additional switch ports. Plus it runs Linux, so more on that later.

Despite having worked on a number of ARM platforms I've never actually had an ARM based development box at home. I have a Raspberry Pi B Classic (the original 256MB rev 0002 variant) a coworker gave me some years ago, but it's not what you'd choose for a build machine and generally gets used as a self contained TFTP/console server for hooking up to devices under test. Mostly I've been able to do kernel development with the cross compilers already built as part of Debian, and either use pre-built images or Debian directly when I need userland pieces. At a previous job I had a Marvell MACCHIATObin available to me, which works out as a nice platform - quad core A72 @ 2GHz with 16GB RAM, proper SATA and a PCIe slot. However they're still a bit pricey for a casual home machine. I really like the look of the HoneyComb LX2 - 16 A72 cores, up to 64GB RAM - but it's even more expensive.

So when I saw the existence of the 8GB Raspberry Pi 4 I was interested. Firstly, the Pi 4 is a proper 64 bit device (my existing Pi B is ARMv6 which means it needs to run Raspbian instead of native Debian armhf), capable of running an upstream kernel and unmodified Debian userspace. Secondly the Pi 4 has a USB 3 controller sitting on a PCIe bus rather than just the limited SoC USB 2 controller. It's not SATA, but it's still a fairly decent method of attaching some storage that's faster/more reliable than an SD card. Finally 8GB RAM is starting to get to a decent amount - for a headless build box 4GB is probably generally enough, but I wanted some headroom.

The Pi comes as a bare board, so I needed a case. Ideally I wanted something self contained that could take the Pi, provide a USB/SATA adaptor and take the drive too. I came across the pre-order for the DeskPi Pro, decided it was the sort of thing I was after, and ordered one towards the end of September. It finally arrived at the start of December, at which point I got round to ordering a Pi 4 from CPC.

Total cost ~£120 for the case + Pi.

I managed to break a USB port on the DeskPi. It has a pair of forward facing ports; I plugged my wireless keyboard dongle into one and when trying to remove it the solid spacer bit in the socket broke off. I've never had this happen to me before and I've been using USB devices for 20 years, so I'm putting the blame on a shoddy socket.

The first drive I tried was an old Crucial M500 mSATA device. I have an adaptor that makes it look like a normal 2.5" drive so I used that. Unfortunately it resulted in a boot loop; the Pi would boot its initial firmware, try to talk to the drive and then reboot before even loading Linux. The DeskPi Pro comes with an M.2 adaptor and I had a spare M.2 drive, so I tried that and it all worked fine. This might just be power issues, but it was an unfortunate experience especially after the USB port had broken off.

(Given I ended up using an M.2 drive another case option would have been the Argon ONE M.2, which is a bit more compact.)

The case is a little snug; I was worried I was going to damage things as I slid it in. Additionally the construction process is a little involved. There's a good set of instructions, but there are a lot of pieces and screws involved. This includes a couple of FFC cables to join things up. I think this is because they've attempted to make a compact case rather than allowing a little extra room, and it does have the advantage that once assembled it feels robust without anything loose in it.

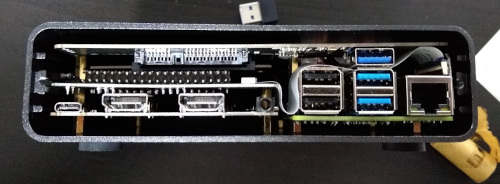

I hate the need for an external USB3 dongle to bridge from the Pi to the USB/SATA adaptor. All the cases I've seen with an internal drive bay have to do this, because the USB3 isn't brought out internally by the Pi, but it just looks ugly to me. It's hidden at the back, but meh.

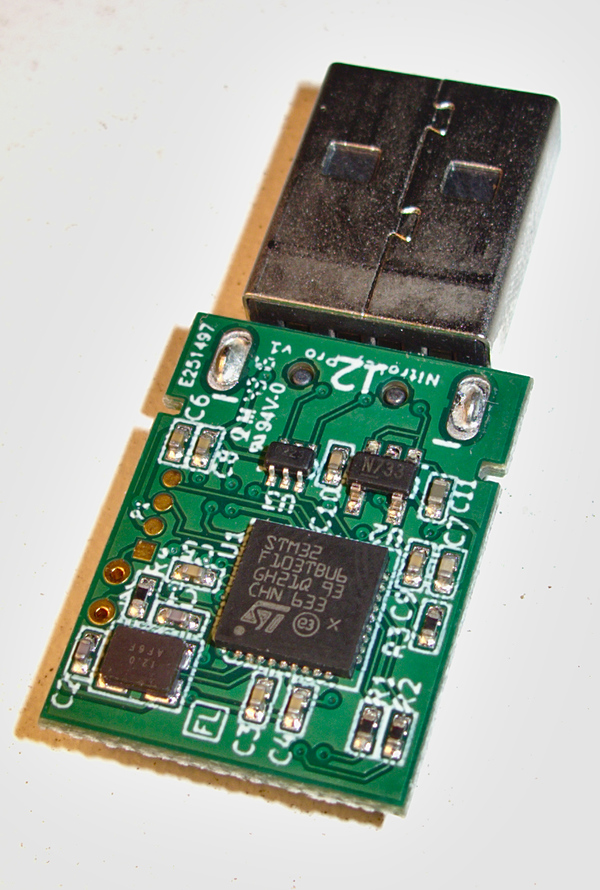

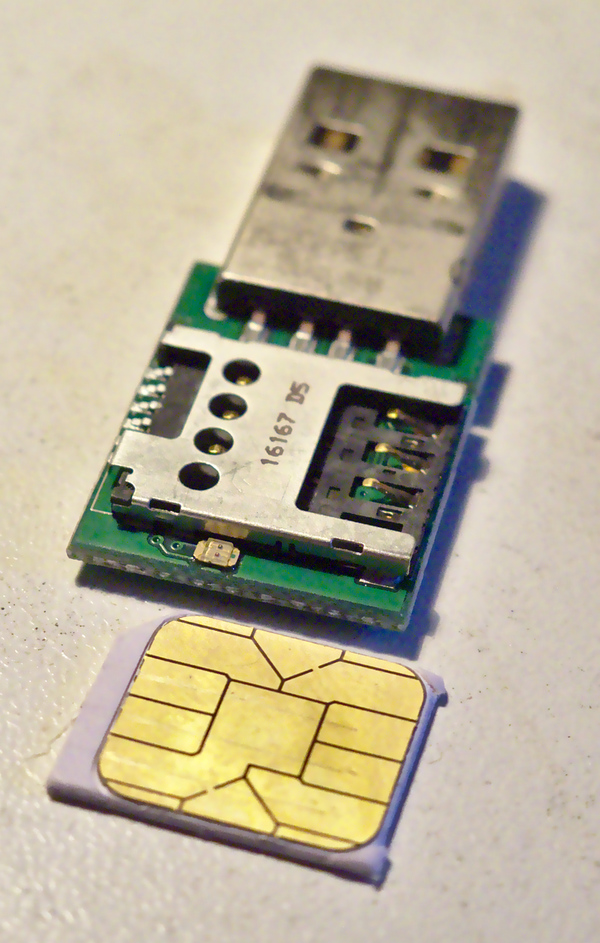

Fan control is via a USB/serial device, which is fine, but it attaches to the USB C power port which defaults to being a USB peripheral. Raspbian based kernels support device tree overlays which allows easy reconfiguration to host mode, but for a Debian based system I ended up rolling my own dtb file. I changed

Completely forgot to mention this earlier in the year, but delighted to say that in just under 4 weeks I'll be attending DebConf 17 in Montréal. Looking forward to seeing a bunch of fine folk there!

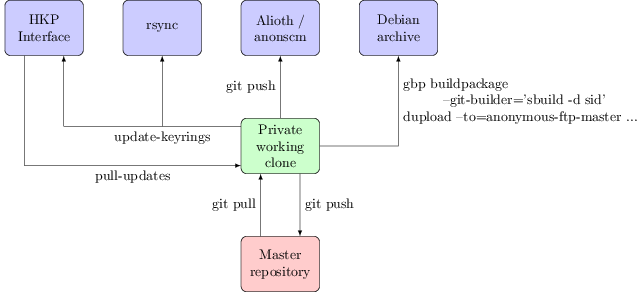

Outbound:
